CR: Alright guys...

Mercury is in retrograde until the 15th, but things don't completely clear up until the 30th! Watch your backs! (Traffic children!)

"In general, Mercury rules thinking and perception, processing and disseminating information and all means of communication, commerce, education and transportation. By extension, Mercury rules people who work in these areas, especially people who work with their minds or their wits: writers and orators, commentators and critics, gossips and spin doctors, teachers, travellers, tricksters and thieves.

Mercury retrograde gives rise to personal misunderstandings; flawed, disrupted, or delayed communications, negotiations and trade; glitches and breakdowns with phones, computers, cars, buses, and trains. And all of these problems usually arise because some crucial piece of information, or component, has gone astray or awry.

It is therefore not wise to make important decisions while Mercury is retrograde, since it is very likely that these decisions will be clouded by misinformation, poor communication and careless thinking. Mercury is all about mental clarity and the power of the mind, so when Mercury is retrograde these intellectual characteristics tend to be less acute than usual, as the critical faculties are dimmed. Make sure you pay attention to the small print!"

Tuesday, September 30, 2008

Option Explicit

Call polygon()

Sub polygon()

Dim object1, object2,arrdiv1, arrdiv2, arrcyl, arrstart(1), arrend(1), t, arrcyls

object1 = Rhino.GetObject("bottom polygon")

object2 = Rhino.GetObject ("top polygon")

arrdiv1 = Rhino.dividecurve(object1, 6, True)

arrdiv2 = Rhino.dividecurve(object2, 6, True)

arrcyl = Rhino.addcylinder(Array(0,0,0), Array (0,0,16.97), 0.3)

For t =0 To UBound(arrdiv1)-3

arrstart(0)=Array(0,0,0)

arrstart(1)=Array(0,0,1)

arrend(0)=arrdiv1(t)

arrend(1)=arrdiv2(t+3)

Call Rhino.OrientObject(arrcyl, arrstart, arrend, True)

Next

Call Rhino.DeleteObject(arrCyl)

arrcyls = Rhino.GetObjects("Select objects to mirror")

If IsArray(arrcyls) Then

Call Rhino.mirrorobjects(arrcyls, Array (0,0,0), Array (4.5,-2.6,0), True)

End If

End Sub

Connecting Opposite Points

Option Explicit

'Script written by Savannah Bridge

'Script version Monday, September 29, 2008 10:52:17 PM

Call Main()

Sub Main()

Dim poly1, poly2, arrPoly1Points, arrPoly2Points, i, n

n=6

Rhino.Command "Polygon N=" & n & " w0,0,0 w0,12,0"

poly1 = Rhino.LastCreatedObjects()(0)

Rhino.Command "Polygon N=" & n & " w0,0,25 w0,12,25"

poly2 = Rhino.LastCreatedObjects()(0)

arrPoly1Points = Rhino.PolylineVertices(poly1)

arrPoly2Points = Rhino.PolylineVertices(poly2)

For i = 0 To n-1

Rhino.AddCylinder arrPoly1Points(i), arrPoly2Points((i+(n/2))Mod n), .25

Next

End Sub
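The index `(i+(n/2)) Mod n` pairs each bottom vertex with the top vertex halfway around the polygon, and it wraps back to the start once it passes the last vertex. Below is a plain-Python sketch of just that index arithmetic, outside Rhino. `opposite_pairs` is a hypothetical helper name, and the sketch assumes an even number of sides so that `n/2` is a whole number:

```python
def opposite_pairs(n):
    """Pair each vertex index i on one n-gon with the index halfway around the other.

    Assumes n is even, so n // 2 is exactly half the vertex count.
    """
    if n % 2 != 0:
        raise ValueError("opposite vertices only line up for an even number of sides")
    half = n // 2
    # (i + half) % n wraps indices past n - 1 back to the start of the polygon
    return [(i, (i + half) % n) for i in range(n)]


print(opposite_pairs(6))
# [(0, 3), (1, 4), (2, 5), (3, 0), (4, 1), (5, 2)]
```

For the hexagons above (n = 6), this yields six pairs, which means one strut per bottom vertex. With an odd side count, no vertex sits directly opposite another, so the sketch rejects that case.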

Option Explicit

Call polygon()

Sub polygon()

Dim object1, object2,arrdiv1, arrdiv2, arrcyl, arrstart(1), arrend(1), t

object1 = Rhino.GetObject("bottom polygon")

object2 = Rhino.GetObject ("top polygon")

arrdiv1 = Rhino.dividecurve(object1, 6, True)

arrdiv2 = Rhino.dividecurve(object2, 6, True)

arrcyl = Rhino.addcylinder(Array(0,0,0), Array (0,0,16.97), 0.3)

For t =0 To UBound(arrdiv1)-3

arrstart(0)=Array(0,0,0)

arrstart(1)=Array(0,0,1)

arrend(0)=arrdiv1(t)

arrend(1)=arrdiv2(t+3)

Call Rhino.OrientObject(arrcyl, arrstart, arrend, True)

Next

For t =0 To UBound(arrdiv2)-3

arrstart(0)=Array(0,0,0)

arrstart(1)=Array(0,0,1)

arrend(0)=arrdiv2(t)

arrend(1)=arrdiv1(t+3)

Call Rhino.OrientObject(arrcyl, arrstart, arrend, True)

Next

Call Rhino.DeleteObject(arrCyl)

End Sub
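This version uses two bounded loops instead of wrapping the index. The first loop connects bottom point `t` to top point `t+3`, and the second connects top point `t` to bottom point `t+3`. Assuming `DivideCurve` returns six distinct points (indices 0 to 5) for each hexagon, the two loops together connect every bottom point to the top point three places away, with wrap-around. Below is a plain-Python check of that index bookkeeping. The function names are hypothetical and no Rhino calls are involved:

```python
def two_loop_struts(count, offset=3):
    """Mimic the script's two For loops; return (bottom_index, top_index) pairs."""
    struts = []
    # first loop: For t = 0 To UBound(arrdiv1)-3, bottom t -> top t + offset
    for t in range(count - offset):
        struts.append((t, t + offset))
    # second loop: top t -> bottom t + offset, stored as (bottom, top)
    for t in range(count - offset):
        struts.append((t + offset, t))
    return struts


def wrapped_struts(count, offset=3):
    """Single loop with modular wrap-around: bottom i -> top (i + offset) mod count."""
    return [(i, (i + offset) % count) for i in range(count)]


print(sorted(two_loop_struts(6)) == sorted(wrapped_struts(6)))  # True
```

The equivalence depends on the offset (3) being exactly half of the point count (6). With any other offset, the two bounded loops and the wrapped loop produce different sets of struts.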

Monday, September 29, 2008

Stumbling through Rhino

I have been looking online for some really cool-looking examples of Rhino scripting.

When I was stumbling (using StumbleUpon), I found another blog like this one.

http://rhinoscripting.blogspot.com/

Check it out. It looks really interesting.

rhino polygon

Option Explicit

'Script written by Christian Jordan

'Script version Monday, September 29, 2008 11:42:58 AM

Call Polygon()

Sub Polygon()

Dim intSides, strPoint, x

intSides = Rhino.GetInteger("Number of sides", 6, 3, 20)

x = Rhino.GetInteger("point on X-Axis")

strPoint = " w" & x & ",0,0"

Rhino.Command "Polygon N=" & intSides & " w0,0,0" & strPoint

End Sub
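The `Polygon` command here receives the side count, a centre at `w0,0,0`, and a second point `wx,0,0` on the x axis. This sketch assumes the command is in its default inscribed mode, where that second point is one of the corners. In that case, every corner sits `|x|` from the centre and neighbouring corners are spaced `360/N` degrees apart. Below is a plain-Python sketch of that geometry. `polygon_vertices` is a hypothetical helper, and Rhino's own vertex order and direction may differ:

```python
import math


def polygon_vertices(sides, x):
    """Corners of a regular polygon centred on the world origin in the XY plane.

    Assumes the second point picked, (x, 0, 0), is a corner (inscribed mode),
    so every corner lies at distance |x| from the centre.
    """
    if sides < 3:
        raise ValueError("a polygon needs at least 3 sides")
    if x == 0:
        raise ValueError("the corner point must not coincide with the centre")
    radius = abs(x)
    start = 0.0 if x > 0 else math.pi  # angle of the first corner
    step = 2 * math.pi / sides         # angle between neighbouring corners
    return [
        (radius * math.cos(start + k * step), radius * math.sin(start + k * step), 0.0)
        for k in range(sides)
    ]


corners = polygon_vertices(6, 10)
print(len(corners))  # 6
print(corners[0])    # (10.0, 0.0, 0.0)
```

For a hexagon, the side length equals the radius, so each edge of the example is 10 units long.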

Thursday, September 25, 2008

this script is really stressing me out

I'm stressed out with the surface manipulation script. I can't figure out the "UBound".

I am going to throw my monitor out the window.

Call movepts

Sub movepts

Dim strobject, y, n, arrpoints, grips

strobject = Rhino.GetObject("select plane")

Call Rhino.EnableObjectGrips(strobject)

grips = Rhino.selectobjectgrips(strobject)

'ReDim Preserve arrpoints(grips)

For n = 0 To UBound(arrpoints)-1

arrpoints = Rhino.objectgriplocation(strobject,n)

y = Rhino.objectgriplocation (strobject, n, Array(arrpoints(n)(0), arrpoints(n)(1),4*Rnd))

Next

End Sub

What am I doing wrong here?
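There are two likely culprits.

1. `arrpoints` is declared with `Dim` but never assigned before the loop header calls `UBound(arrpoints)`. VBScript raises a type-mismatch error when `UBound` is given something that is not an array.
2. Inside the loop, `Rhino.ObjectGripLocation(strobject, n)` returns a single point, so indexing the result as `arrpoints(n)(0)` also fails.

The ControlPointArrange post avoids both problems by looping up to the grip count that `Rhino.SelectObjectGrips` returns. The bound arithmetic trips people up because `ReDim a(k)` creates k + 1 slots, and `UBound` returns the last index rather than the length. Below is a small Python model of those two rules. The helpers `vb_redim` and `vb_ubound` are hypothetical stand-ins, not Rhino or VBScript functions:

```python
def vb_redim(upper_bound):
    """Model VBScript's ReDim a(upper_bound): indices 0..upper_bound, i.e. upper_bound + 1 slots."""
    return [None] * (upper_bound + 1)


def vb_ubound(values):
    """Model VBScript's UBound: the last valid index, not the element count."""
    if not isinstance(values, list):
        # VBScript raises "Type mismatch: 'UBound'" for a non-array such as an unassigned Dim
        raise TypeError("UBound needs an array")
    return len(values) - 1


grip_count = 36                                  # e.g. the grips of a 6 x 6 point surface
slots = vb_redim(grip_count - 1)                 # ReDim slots(35) -> 36 slots
visited = list(range(0, vb_ubound(slots) + 1))   # For n = 0 To UBound(slots)
print(len(slots), vb_ubound(slots), len(visited))  # 36 35 36
```

Reading each grip's location inside the loop and then writing it back with a new z value (the same read-then-write pattern ControlPointArrange uses) keeps every index within 0 to grip count minus 1.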

Wednesday, September 24, 2008

Some Scripts/Info

CR: Hey guys--

Here is a blog I found online (Institute for Advanced Architecture of Catalonia) with some interesting scripts on it--again, not much...but maybe helpful? Nice screenshots; I thought it might be useful.

http://www.iaacblog.com/scripting/?cat=34

Tuesday, September 23, 2008

ControlPointArrange

Option Explicit

Call ControlPointArrange()

Sub ControlPointArrange()

'arrCount holds the number of points in the u and v directions (6 each)

'arrPoints holds the u*v = 36 grid points, at indices 0 to 35

Dim arrCount(1), arrPoints(35), nCount, i, j

arrCount(0) = 6

arrCount(1) = 6

nCount = 0

'since we're starting at 0, we need to subtract 1 from the initial count

'this For Next Loop creates the grid of points (not visible - arrPoints just holds the points)

'Call Rhino.AddPoints(arrPoints) to see them

For i = 0 To arrCount(0) - 1

For j = 0 To arrCount(1) - 1

arrPoints(nCount) = Array(i*2, j*4, 0)

nCount = nCount + 1

Next

Next

'Call Rhino.AddPoints(arrPoints)

Call Rhino.AddSrfPtGrid(arrCount, arrPoints)

'----------------------------------------------------------------------------------------

Dim strObject, n

Dim arrGrips(), arrPoint, intGrips

strObject = Rhino.GetObject("Select objects")

'must enable grips before they can be manipulated

'intGrips is used to select the Object Grips of strObject

Call Rhino.EnableObjectGrips(strObject)

intGrips = Rhino.SelectObjectGrips(strObject)

'we have to resize the Array arrGrips() since we didn't declare a size

ReDim Preserve arrGrips(intGrips)

'ReDim arrGrips(intGrips) creates indices 0 to intGrips (intGrips + 1 slots), so UBound(arrGrips) equals intGrips; subtracting 1 makes the loop visit grip indices 0 to intGrips - 1, i.e. all 36 grips

For n = 0 To UBound(arrGrips) - 1

'arrGrips is an Array that will hold the locations of the Grips of strObject

arrGrips(n) = Rhino.ObjectGripLocation(strObject, n)

'once we have an array that holds the original locations of the Grips

'we use the same Method to modify the locations

arrPoint = Rhino.ObjectGripLocation(strObject,n,Array(arrGrips(n)(0), arrGrips(n)(1),3*Rnd))

Next

End Sub
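The nested loop fills `arrPoints` row by row. For each `i` (u direction), it runs through all six `j` values, so the point for `(i, j)` lands at index `nCount = i*6 + j`, with a spacing of 2 in u and 4 in v. Below is a plain-Python sketch of the same ordering. The function name `grid_points` is hypothetical, and the sketch only builds the flat list that `Rhino.AddSrfPtGrid` receives; it does not create a surface:

```python
def grid_points(u_count, v_count, u_step=2, v_step=4):
    """Flatten a u_count x v_count grid the way the nested For loops do (i outer, j inner)."""
    points = []
    for i in range(u_count):          # For i = 0 To arrCount(0) - 1
        for j in range(v_count):      # For j = 0 To arrCount(1) - 1
            points.append((i * u_step, j * v_step, 0))
    return points


pts = grid_points(6, 6)
print(len(pts))   # 36, matching Dim arrPoints(35)
print(pts[7])     # (2, 4, 0): i = 1, j = 1 -> index 1*6 + 1
```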

Move Control Points

SB:

Option Explicit

'Script written by Savannah Bridge

'Script version Sunday, September 21, 2008 9:04:47 PM

Call Main()

Sub Main()

Dim arrObject, arrGrips, i, x, y, z

arrObject = Rhino.GetObject ("Select Object:")

Rhino.EnableObjectGrips arrObject

arrGrips = Rhino.GetObjectGrips(,True,True)

For i = 0 To UBound (arrGrips)

x = arrGrips(i)(2)(0)

y = arrGrips(i)(2)(1)

z = Rnd * 10

Rhino.ObjectGripLocation arrGrips(i)(0), arrGrips(i)(1), Array(x,y,z)

Next

End Sub
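The script reads each entry `arrGrips(i)` as a three-part record: the object id `(0)`, the grip index `(1)`, and the grip's location `(2)`. The loop keeps the x and y of each location and replaces z with `Rnd * 10`. Below is a plain-Python sketch of that transformation on made-up sample records. The id string `"srf-1"` and the function name `raise_grips` are hypothetical, and nothing here talks to Rhino:

```python
import random


def raise_grips(grips, max_height=10.0, rng=random):
    """Return new grip records with x, y kept and z replaced by a random height.

    Each record mirrors the script's arrGrips(i): (object_id, grip_index, (x, y, z)).
    """
    moved = []
    for object_id, index, (x, y, _z) in grips:
        z = rng.random() * max_height   # like Rnd * 10: a value in [0, 10)
        moved.append((object_id, index, (x, y, z)))
    return moved


sample = [("srf-1", 0, (0.0, 0.0, 0.0)), ("srf-1", 1, (2.0, 0.0, 0.0))]
print(raise_grips(sample, rng=random.Random(42)))
```

Passing a seeded `random.Random` makes a run repeatable. This is roughly the role `Randomize` with a fixed seed would play in VBScript.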

Monday, September 22, 2008

Ius Chasma - Surface Manipulation

CJ: The manipulating agents and the location of this surface manipulation make it an interesting example of emergence. The source of the images is here:

http://www.nasa.gov/multimedia/imagegallery/image_feature_1177.html

Notice some of the words used to describe the "stratigraphic layers modified by wind and water": interchanging, layers, deposits, process,...

Naturally occurring systems/phenomena are valuable precedents that can be used as inspiration in our own scripted emergent systems.

Sunday, September 21, 2008

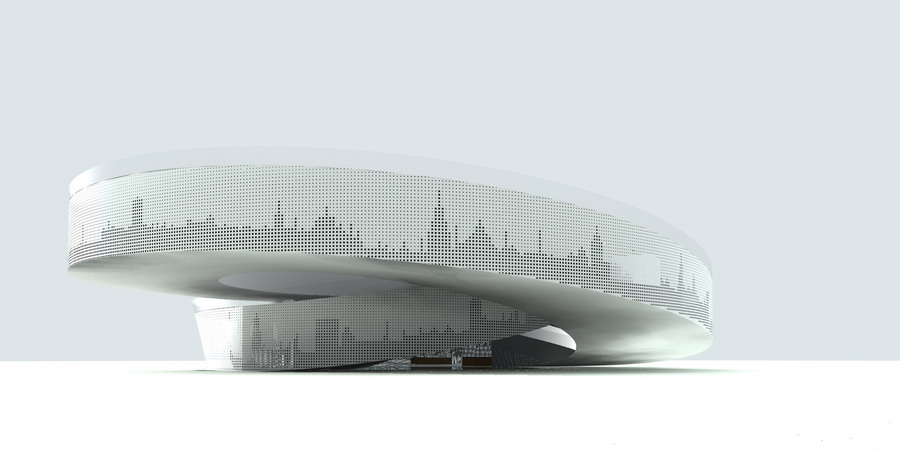

Danish Pavilion

Saturday, September 20, 2008

This is a link to a spoof video/feed of the 'Big Bang'/'doomsday' machine:

http://www.liveleak.com/view?i=57f_1221333059

Thursday, September 18, 2008

Goalies

SA: I just added Goalies to my Futbol Field. They're a little crude and mis-proportioned, they lack hands and feet, and the numbers may need to be edited to fit your goals, but here's the script:

Dim sphHead, cylBod, cylArm1, CylArm2, CylLeg1, CylLeg2

sphHead = Rhino.AddSphere(Array(-170.2,0, 6), 1)

cylBod = Rhino.AddCylinder(Array(-170.2,0,5), Array(-170.2,0,2.5), 1)

cylArm1 = Rhino.AddCylinder(Array(-170.2,-0.5,5), Array(-170.2,-1.5,2), 0.5)

cylArm2 = Rhino.AddCylinder(Array(-170.2,0.5,5), Array(-170.2,1.5,2), 0.5)

cylLeg1 = Rhino.AddCylinder(Array(-170.2,0.5,2.5), Array(-170.2,0.5,0), 0.5)

cylLeg2 = Rhino.AddCylinder(Array(-170.2,-0.5,2.5), Array(-170.2,-0.5,0), 0.5)

Dim Goalie2

Goalie2 = Rhino.MirrorObjects(Array(sphHead, cylBod, cylArm1, cylArm2, cylLeg1, cylLeg2), Array(0,111.45,0), Array(0,-111.45,0), True)
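`Rhino.MirrorObjects` receives a start point and an end point, here `(0,111.45,0)` and `(0,-111.45,0)`. These define a line along the y axis through the centre of the field. This sketch assumes the mirror plane is the vertical plane through that line, which is an assumption about a world-top construction plane. Under that assumption, a point's x coordinate simply flips sign, so the goalie built at x = -170.2 reappears at x = 170.2. Below is a small Python sketch that reflects a point across a vertical plane through any line from `a` to `b`. `mirror_point` is a hypothetical helper using plain vector math, not the Rhino API:

```python
import math


def mirror_point(p, a, b):
    """Reflect point p across the vertical plane containing the line from a to b.

    Works in the XY plane and leaves z unchanged (assumes the mirror plane is vertical).
    """
    dx, dy = b[0] - a[0], b[1] - a[1]
    length = math.hypot(dx, dy)
    if length == 0:
        raise ValueError("mirror line needs two distinct points")
    ux, uy = dx / length, dy / length   # unit direction of the mirror line
    vx, vy = p[0] - a[0], p[1] - a[1]   # p relative to a point on the line
    along = vx * ux + vy * uy           # component of v along the line
    # reflection: keep the along-line part, flip the perpendicular part
    rx, ry = 2 * along * ux - vx, 2 * along * uy - vy
    return (a[0] + rx, a[1] + ry, p[2])


head = (-170.2, 0.0, 6.0)
print(mirror_point(head, (0, 111.45, 0), (0, -111.45, 0)))
```

The same helper also handles the mirror line from (0,0,0) to (4.5,-2.6,0) used in the September 30 polygon script.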

Tuesday, September 16, 2008

SS: Here is an excerpt from, and a link to, an article by Tom Wiscombe, the founder of Emergent. Tom defines and explains emergence, both as it occurs in nature and as the reason it is the name of the company. The journal entry stresses the need for complexity, in the sense of freeing the architectural process from a linear or additive routine. It's interesting how he connects biology, bio-mimicry and computation with architectural performance. This got me thinking about how readily computation in architecture can be a catalyst for sustainable design. After reading, take a look at some of the projects.

__This century is going to be about biology. I don’t want to confuse architecture with biology. You can take analogies too far of course. But, as Gödel once said in his Theory of Incompleteness, sometimes to solve a problem in a particular discipline, you have to switch to completely different territory.

Architecture and biology at first glance do not appear to be so different; both are materially and organizationally based, both are concerned with morphology and structuring. Both are wound together by multiple simultaneous systems and drives, and probably most important for us here, both are constructed out of parts operating as collectives. While buildings, and to a lesser extent organisms (especially the human kind), may often be laden with content or meaning, that seems to be culturally transient and not particularly informative for either on the level of material dynamics and properties. Nevertheless, despite their parallels, some of the primary terms with which both architecture and biology are concerned turn out to be different in kind rather than degree: what architecture calls function, in the dogmatic sense, biology calls behavior. What architecture calls order, biology calls DNA scripting. Biology, it turns out, defines its processes dynamically and generatively, while architectural processes still tend to be understood as fixed and stable. Recent bio-theories on complex adaptive systems and especially the phenomena of emergence have begun to open up territory that architecture can no longer ignore if it is to have any relevance, and indeed resilience, in the future.__

Article http://www.emergentarchitecture.com/pdfs/OZJournal.pdf

Projects http://www.emergentarchitecture.com/projects.php?id=20

Monday, September 15, 2008

Major Ass Archispeak

Ok...so this is so bizarre, off the wall, and almost impossible to understand. But there's something to be said about the poetic way that both mental process and computer language are discussed in this writing--I'm not even sure what it is, exactly--it's quasi-obvious that it's a personally oriented, specific, project-based piece (?), but interesting nonetheless. As the title of my post implies, just a whollllle lot of 'archispeak' (props to CJ for the terminology). (These people actually use the term 'postulate'...super fabulous.)

-CR

"Overview of the project “Blob computing”: A Blob is a generic primitive used to structure a uniform computing substrate into an easier-to-program parallel virtual machine. We find inherent limitations in the main trend of today’s parallel computing, and propose an alternative bio-inspired approach trying to combine both scalability and programmability. We seek to program a uniform computing medium such as 2D cellular automata, using two levels:

In the first “system level”, a local rule can maintain global connected regions called blobs. A blob is similar to a deformable elastic balloon filled with a gas of particles. Each hardware processing element can host one particle. Blobs are interconnected using specific link-particles. The rule can cause blobs to move, duplicate or delete themselves. The rule can also propagate signals intra-blob or inter-blob, like a wave.

In the second “programmable level”, each particle contains an elementary piece of data and code, and can receive, process or send signals. The set of particles contained in a given blob encodes a user-programmed finite state automaton with output actions (which are machine instructions), including divide-blob, merge-blob, duplicate-link and delete-link. Execution starts with a single ancestor blob that divides repeatedly and generates a network of blobs.

The blob is designed to be a generic distributed-computing primitive installing a higher-level virtual machine on top of a low-level uniform computing medium. This virtual machine, called a “self developing automata network”, has a number of properties related to parallelism. Moreover, its programming language has been used in machine learning since 1992 under the name of “cellular encoding”.

The following presentation summarizes the project challenges. We present the motivation, and state of the art. Next, we outline “self developing automata network” by listing its specific features that ensure programmability, parallelization, and performance. The fine grain blob machine is presented last, as the most promising option to fit self-development, while allowing scalability.

The “sequential dogma” is obsolete: New technologies offer ever-increasing hardware resources that make the traditional so-called “sequential dogma” obsolete. Both our favoured programming languages and our personal computers reflect a sequential dogma: on the software level, we use a step-by-step modification of a global state. On the hardware level, we partition the machine between a very big passive part, the memory, and a very fast processing part, the processor. While this dogma was adapted to the early days of computers (it can be implemented with as few as 2250 transistors), it is likely to become obsolete as the number of resources increases (2 billion transistors by 2005).

Parallel computing has inherent limitations. Parallel computers can currently exploit those resources using parallelizing compilers. However, they fail to combine scalability and general-purpose programmability. Parallel machine models already exist that can grow in size as more hardware resources become available, and can solve bigger tasks more quickly (scalability). However, the difficult problem that remains is to make sure the machine can be programmed for a wide variety of tasks (programmability) and that the programs can be compiled to execute in parallel (parallelization) without losing too much time in communication (performance). Most existing approaches limit scalability (a few dozen processors) and programmability (regular nested loops working on arrays). Parallelization is automated using intelligent compilation, and finally, a concrete performance result is obtained on existing machines. The sequential dogma is still there, both in the programming model and in the machine execution model.

The brain does go beyond these limitations: The brain illustrates other architectural principles able to exploit massive parallel resources while also allowing a kind of general-purpose capability. Biological systems in general are not inherently limited in scalability. Examples are the molecular machinery of a cell (add more molecules) or the neurons of a brain (add more neurons). Furthermore, the brain does have the ability to perform a wide variety of tasks (programmability), involving sensing and actuating in the real world, that are very difficult for a traditional computer. We call that “real world computing”. We are interested in the architectural principles explaining the brain's performance, rather than the machine learning capability, which belongs more to the “software level”. Indeed, those systems follow a truly non-sequential dogma: 1- The memory part and the computing part of each processing element are intimately mixed, to the point that they cannot be separated; 2- Execution involves a stirring of every bit of information which is continuous, decentralized, non-deterministic and massively parallel. Another important principle needed for real world computing is the adequation between structure and functionality: the brain architecture itself, i.e. the specific patterns of interconnections between the processing elements (the neurons), is adapted to the task being performed.

Exploring new architecture principles: The blob computing project explores the architecture principles of a scalable and programmable machine model. We postulate that in the long term, the increasing number of hardware resources will enable us to tackle real world computing. However, the price to pay is to throw away the sequential dogma and adopt new architectural principles. Our research goal is to propose a machine model that can push scalability to some billions of Processing Elements, without limiting programmability to simulation or scientific computations. This goal is difficult; furthermore, the hardware resources needed are not yet available, so we are working on the theory. We have the concept underlying the machine. We focus on proving those concepts either with mathematics or by simulating building blocks (machine parts). The model includes original algorithms to achieve parallelization, with optimal theoretical performance results.

Programmability: a description that is both spatial and dynamic. The language specifies the parallel development of a network, node by node. Thus it describes an object existing in space, i.e. a circuit, without sacrificing the capability of dynamic instantiation needed for programmability. To exploit a huge amount of hardware resources, we need to develop computation in space, rather than in time by reusing a bounded piece of hardware. We have to specify circuits which are spatially laid out and implicitly parallel. However, few algorithms can be programmed into a fixed-size, static VLSI circuit. To provide more programmability, our language adds a mechanism allowing dynamic instantiation of circuits. It describes not only circuits but also how to develop them from an initial ancestor node that can repeatedly duplicate or delete itself and its links. Moreover, the shape of the developed circuits is not restricted to the crystalline structure of the task graphs associated with affine nested loops. It is programmed and therefore adapted to the specific targeted functionality; we believe that the adequation between structure and function (a property of the brain) is the key to building “real world computing” machines. The programmability has two practical foundations: 1- We have developed a compiler from a high-level language (PASCAL); 2- Numerous experiments demonstrate that it combines well with machine learning. It also has a theoretical foundation: it exhibits a property called “parallel universality”.

Parallelization: no parallelizing compiler is needed. The user has to “fold” a task graph into a “self-developing automata network”, which is distributed by the machine itself at run time. The state of the machine represents a circuit or network. More precisely, the nodes of this network are not logic gates but finite automata with output actions. The automata network is in fact self-developing. An automaton's actions can duplicate or delete itself (or its connections). These actions are machine instructions interpreted directly in hardware. As a result, the machine language itself keeps fully parallel semantics:

1-The parallelism is exposed completely.

2-Communications are always local; an automaton communicates with its direct neighbors.

There is no shared memory, or even a global name space. Who communicates with whom is thus clearly represented at any time by the network itself, and can be exploited by the machine to automatically map the network onto its hardware at run time. The parallelization effort is shared between the user and the machine. As a result, no intelligent compilation is needed for parallelization.

Performance can be theoretically optimal. Simulation of physical laws continuously migrates data and code throughout the hardware so as to optimize communication latency and load balancing. The machine architecture has a 3D shape and its state represents an automata network in the underlying 3D space. Physical forces are simulated: connections act as springs, pulling any pair of communicating automata nearer to reduce communication latency. Another, repulsive force between automata homogenizes the density of the automata distribution, which naturally provokes load balancing. Such techniques already exist, but here, the combination with step-by-step development prevents the plague of local convergence. Indeed, the adjustment needed at each step is sufficiently simple that automata are directly attracted towards their new optimal position. There are no sub-optimal basins of attraction in which to be trapped. Assuming a set of reasonable hypotheses (already tested in some classic cases such as sort or matrix multiply), the theory predicts optimal asymptotic performance results (up to a constant factor), on the condition of using the VLSI complexity.

Scalability implies fine grain. A fine-grain machine better supports the dynamic migration of code and data, and is more promising for hardware scalability. A blob machine could be coarse-grained: each Processing Element (PE) would then simulate a subnet of the self-developing automata network. However, we prefer the converse: a fine-grained machine where each automaton is simulated by a set of PEs, forming a connected region called a blob. First of all, a medium grain (one automaton per PE) would impose an upper bound on the size of each automaton. Second, because the data and the code of each automaton keep moving across the PEs, it is crucial to minimize the associated communication cost. In the fine-grained model, moving a blob can be done in a pipelined way, using as many wires as the blob diameter. Third, the time cost of simulating the action of physical forces can be replaced by a hardware cost. Indeed, those forces are intrinsically local and can be described by differential equations, a discrete form of which leads to simple local rules (like cellular automata) implemented directly by circuits in hardware. Fourthly, a fine-grain model such as the amorphous machine has far more potential for scalability: a machine is seen as a fault-tolerant computing medium, made of billions of small identical PEs, identically programmed, with connections only between nearby neighbors, without central control, and without any requirement of synchrony or regular interconnect. Nanotechnologies could offer such a perspective. Finally, it is also an attractive scientific challenge to find out what is the smallest hardware building block enabling both scalability and programmability."
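The quoted overview's placement idea has two parts: springs pull communicating automata together, and a repulsive force spreads all automata apart. This is essentially force-directed layout. Below is a toy 2D Python sketch of that general idea. The node positions, spring constant, rest length, and repulsion strength are made-up assumptions, and the sketch is not the blob project's own algorithm:

```python
import math


def relax(positions, links, steps=200, spring=0.05, repulse=0.5, rest=1.0):
    """Toy force-directed relaxation: linked nodes attract, every pair repels.

    positions: list of [x, y] lists (updated in place); links: list of (i, j) pairs.
    """
    n = len(positions)
    for _ in range(steps):
        forces = [[0.0, 0.0] for _ in range(n)]
        # inverse-square repulsion between every pair spreads nodes out ("load balancing")
        for i in range(n):
            for j in range(i + 1, n):
                dx = positions[j][0] - positions[i][0]
                dy = positions[j][1] - positions[i][1]
                d = max(math.hypot(dx, dy), 1e-6)
                f = repulse / (d * d)
                forces[i][0] -= f * dx / d
                forces[i][1] -= f * dy / d
                forces[j][0] += f * dx / d
                forces[j][1] += f * dy / d
        # springs pull communicating pairs towards the rest length ("latency")
        for i, j in links:
            dx = positions[j][0] - positions[i][0]
            dy = positions[j][1] - positions[i][1]
            d = max(math.hypot(dx, dy), 1e-6)
            f = spring * (d - rest)
            forces[i][0] += f * dx / d
            forces[i][1] += f * dy / d
            forces[j][0] -= f * dx / d
            forces[j][1] -= f * dy / d
        # move every node by its net force (simple explicit step)
        for p, (fx, fy) in zip(positions, forces):
            p[0] += fx
            p[1] += fy
    return positions


pair = relax([[0.0, 0.0], [10.0, 0.0]], [(0, 1)])
print(pair)
```

With these constants, two linked nodes that start 10 units apart settle about 2.5 units apart, where the spring pull balances the repulsion. Two unlinked nodes simply drift apart.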

-CR


Sunday, September 14, 2008

Thursday, September 11, 2008

SA: I was looking around for different scripts just to see if I could read and make sense of them. Here is a link to a site with different scripts, some more complex than others. http://www.pdwiki.org/index.php?title=Rhino_Script_Library

Monday, September 8, 2008

I found a picture on Urban Organisation.com. It is an interesting look at modeling in context. What do you think?

SS

--> Castelmola art gallery, Taormina

The gallery occupies a hilltop site and will house the artwork produced over time by artists who come to stay for retreats. The collected pieces will form an ever-growing collection that will be open to the public.


Friday, September 5, 2008

Computation Concerns

CR: Hey friends--I found a really interesting article, posted below. I think it's worth a read. I'm in no way trying to be 'that person' who disagrees with things for the sake of disagreement. I'm thrilled about learning scripting and consumed by the idea of computative possibility. But...I have never separated architecture from the field of fine art, and I think that design itself is an incredibly powerful tool that can have both artistic and social implications. As much as I embrace our field's technological advancement, I wonder when we cross over from responsible design that affects the human experience to an unfiltered show-and-tell. The article below discusses the persistent historical connections between architecture, society, and government. If our growth in the fields of science and technology is in fact incontestably linked to our ability to produce more impressive and successful designs, then we may be involved in a legitimate artistic revolution--one that can activate designers and architects to create structures that not only greatly impact the urban fabric (without degrading it), but also stimulate the human mind.

It's stimulanguage! (#28-Make new words. Expand the lexicon...) :)

Cultural Concerns in Computational Architecture

G. Holmes Perkins, 1904-2004

In September of 2004 I attended two events that reflect on each other. One was the Non-Standard Praxis conference held at MIT. The other was a memorial at the University of Pennsylvania for G. Holmes Perkins, my dean when I was an architecture student at Penn from 1959 to 1966. He had died just a couple of weeks short of his 100th birthday.

The “Non-Standard” in Non-Standard Praxis refers ambiguously to Nonstandard Analysis in mathematics and to emerging computational approaches in architecture. Phrases from the titles of presentations at the conference include: performativity, topologies, virtual standardization, amorphous space, hyperbody research, immaterial limits, affective space, algorithmic flares, the digital surrational, the boundaries of an event-space interaction, bi-directional design process, and voxel space.

The architecture shown was for the most part generated either with 3D animation software or by computational algorithms. Much of it exists only as images, or has been CNC milled from foam and coated. Much of the work is what has been categorized as “blob” architecture. As would be expected, when built buildings were presented, they were usually less radical than the unbuilt designs.

So yes, there was a lot of impracticality and pretension, and a lot of stuff that is not architecture if you define architecture as something you might be able to build with the technologies available in the foreseeable future. But nonetheless, most of the work was extremely interesting, and suggests we are in a highly innovative period in architecture. The architects and students who presented were imaginative, knowledgeable, and committed to bringing the revolutionary power of the computer into architecture at the most fundamental levels.

But I was struck by something, suggested by Dean Perkins’ memorial, that was not addressed at the Non-Standard Praxis conference.

Perkins had been a young modern architect in the 1930s, and had been the chair of city planning at Harvard while Gropius was chair of architecture. In 1951 Perkins was brought to Penn to rebuild the school. He began with the premise that a school of design must include city planning and landscape architecture along with architecture. The landscape and planning departments he built were among the strongest of the 20th century, but the architecture department became even better known as “The Philadelphia School.” Perkins brought to the school Louis Kahn, Robert Venturi, Denise Scott Brown, Robert Geddes, Lewis Mumford, Robert Le Ricolais, Edmund Bacon, and many others. In many cases these people were not known when Perkins hired them but developed in the supportive atmosphere he provided. And the Philadelphia School was not just personalities, but a strong curriculum based on an integrated approach to architecture and a unique synergy of culture, school, city, and profession.

Perkins had always said that architecture must have an ethical, social and aesthetic component; otherwise it is just fashion. This attitude was lacking at the Non-Standard Praxis conference and among many of today’s computational architects.

Perkins was a traditional modernist in all of the best senses: the modernism of clarity, of social responsibility, of the realization that we need to address cities and regional ecology as well as monuments, and that modernism in architecture is part of a new culture of industrialism and democracy. In short, the modernism not only of Mies, Corbu and Gropius, but also of CIAM and Americans like Lewis Mumford and Clarence Stein. Perkins and the Philadelphia School held Mumford’s position that “Architecture, properly understood, is civilization itself.” Perkins and his school based architecture on historical traditions, on our current culture, and on the criticisms of it.

Why is this approach absent among many architects today? There is a claim that Modern Architecture failed in its attempt to address social concerns, as evinced by the 1975 dynamiting of Pruitt-Igoe. But the failure of certain public housing projects is not the failure of Modern Architecture, which was in fact a major success.

Modern Architecture was an integral part of the modern culture that brought about a more egalitarian society, a radical increase in lifespan, a diminishment of infant mortality, an education for the average person that exceeds that of Renaissance nobles, and access to the secrets of the atom and the universe. Modern Architecture provided the homes, offices, factories, laboratories, schools, institutions, and cities in which all of this was realized.

Over the past two decades many architects, perhaps most notably John Hejduk and Peter Eisenman, have challenged the notion that architecture has social responsibilities. They took this position in response to movements in the 1970s that saw architecture as a branch of social advocacy. But it would be a mistake to think that Eisenman and Hejduk do not have cultural concerns. They both set out to reintroduce Architecture as the central concern of architecture, that is to say, aesthetics as a concern with the deepest human meanings. For example, far from being isolated from culture, Eisenman is deeply immersed in the postmodern condition, and the various phases of his work respond to the culture of the time, be it underlying linguistic structures, discordance, or a resolution of chaotic disjuncture.

It is this sense of setting in a culture that I found missing at Non-Standard Praxis.

We are now in one of the most significant periods of cultural and technological change in history, probably greater in scope than those associated with Newton and Einstein. Developments in quantum mechanics are leading to quantum computers that gain their prodigious power through harnessing their siblings throughout the multiverse. Biotech and genetic engineering are bringing about new species and perhaps the alteration of homo sapiens. Materials engineering and nanotechnology are altering the objects we use and how they are made. Communications technologies promise that eventually everything can be connected to everything. Cosmologists place us in ever-expanding infinities of multiple universes. And individuals will have unprecedented opportunities for education, knowledge and achievement, and the prospect of cognitive powers we cannot yet imagine.

The ribbed vault was not just a structural technique, but also a means of putting the human soul in touch with God. Perspective was not just a means of organizing pictorial space, but also a means of asserting the human observer. Industrial materials were not just economical, but also a means of finding the human place in a democratic world.

In like manner, we should consider Maya, 3D Studio MAX and computational algorithms not just as tools in themselves, but as means of engaging our new world.


arch324

CJ: We are all expected to contribute to the collective learning of the class, and this forum is no different. Please choose a unique (readable) color and use your initials when you post.

9. Begin anywhere. John Cage tells us that not knowing where to begin is a common form of paralysis. His advice: begin anywhere.

...anywhere

10. Everyone is a leader. Growth happens. Whenever it does, allow it to emerge. Learn to follow when it makes sense. Let anyone lead.

anyone...

